Regulatory Sandboxes for AI in Drug Development and Clinical Trials

Regulatory sandboxes provide a controlled, supervised environment in which innovators and regulators can experiment with novel technologies under real-world conditions but without the full weight of the usual regulations. In the context of artificial intelligence (AI) in drug development and clinical trials, sandboxes aim to foster innovation (e.g. AI-driven trial design, AI-generated endpoints) while maintaining patient safety and data integrity.

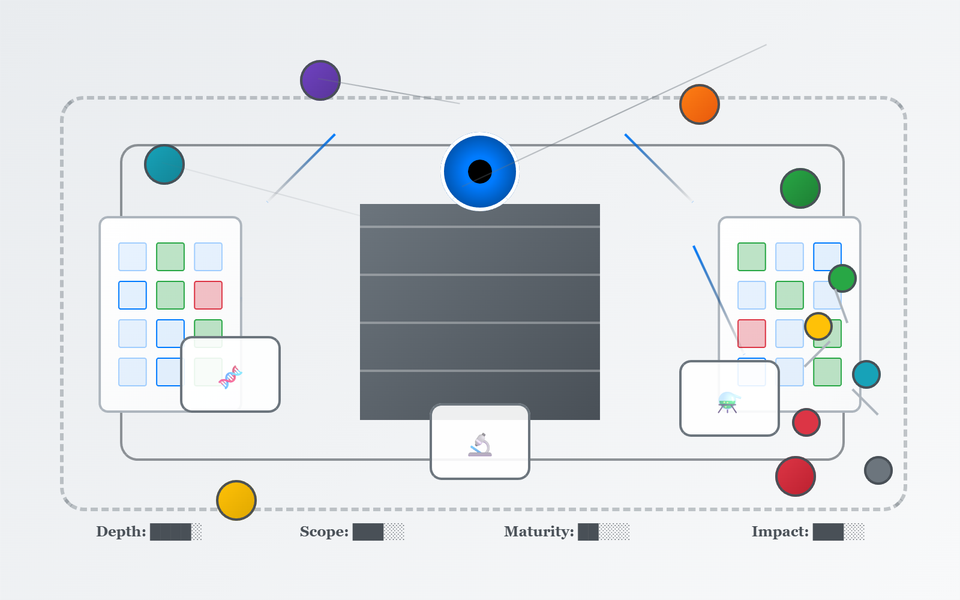

This report examines the status and features of AI-focused regulatory sandboxes (existing or proposed) across six major medicines regulators: the U.S. FDA, the European Medicines Agency (EMA), the UK’s MHRA, Swissmedic, the Israel Ministry of Health, and the Norwegian Medicines Agency. For each, we detail whether an AI sandbox is operational or planned, key features (scope, duration, eligibility, regulatory involvement, AI use cases), example use cases or pilots, stakeholder engagement, and any outputs (e.g. publications or policy changes). We then provide a comparative assessment across five dimensions:

- Depth/Specificity – How specifically the sandbox targets AI in drug development/clinical trials.

- Scope of AI Experimentation – The breadth of AI use cases permitted (e.g. trial endpoints, design, recruitment, manufacturing).

- Maturity – The development stage of the sandbox (planned, pilot, or operational).

- Stakeholder Engagement – Level of involvement and transparency with industry, academia, and other stakeholders.

- Regulatory Impact – Influence of the sandbox on regulatory guidance, policy, or broader national AI strategy in biomedicine.

Tables with scoring (on a 1 to 5 scale, where 5 = highest/best) are provided to compare the six regulators across these dimensions, followed by qualitative analysis.
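One way to picture the scoring scheme is as a small data structure with a range check. The Python sketch below is illustrative only: it encodes the five dimension names and the 1 to 5 scale from this report, and it assumes an unweighted mean as a composite score, which the report itself does not specify. The function names are hypothetical, and no actual regulator scores are encoded.

```python
# Illustrative sketch: a 1-5 scoring rubric over the report's five dimensions.
# The unweighted-mean composite is an assumption, not the report's stated method.

DIMENSIONS = (
    "Depth/Specificity",
    "Scope of AI Experimentation",
    "Maturity",
    "Stakeholder Engagement",
    "Regulatory Impact",
)

MIN_SCORE, MAX_SCORE = 1, 5  # 5 = highest/best


def validate_scores(scores: dict) -> None:
    """Raise ValueError unless each dimension has exactly one integer score in 1-5."""
    missing = set(DIMENSIONS) - set(scores)
    unexpected = set(scores) - set(DIMENSIONS)
    if missing or unexpected:
        raise ValueError(f"missing={sorted(missing)}, unexpected={sorted(unexpected)}")
    for dimension, value in scores.items():
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, int):
            raise ValueError(f"{dimension}: score must be an integer, got {value!r}")
        if not MIN_SCORE <= value <= MAX_SCORE:
            raise ValueError(f"{dimension}: score {value} is outside {MIN_SCORE}-{MAX_SCORE}")


def composite_score(scores: dict) -> float:
    """Hypothetical composite: the unweighted mean of the five dimension scores."""
    validate_scores(scores)
    return sum(scores[d] for d in DIMENSIONS) / len(DIMENSIONS)


if __name__ == "__main__":
    # Placeholder values for demonstration only; not an assessment of any regulator.
    example = dict(zip(DIMENSIONS, [1, 2, 3, 4, 5]))
    print(composite_score(example))  # 3.0
```

Keeping the dimensions in a fixed tuple means a table of all six regulators can be rendered in a consistent column order; weighting some dimensions more heavily would be a small code change but would need its own justification.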

United States: FDA (Food and Drug Administration)

Sandbox Existence and Focus

The FDA does not label any program explicitly as an “AI regulatory sandbox” for drug development, but it has several initiatives that fulfill a similar role of enabling AI innovation under regulatory oversight. Notably, the FDA’s Center for Drug Evaluation and Research (CDER) launched the Innovative Science and Technology Approaches for New Drugs (ISTAND) Pilot Program in 2020 (fda.gov).

ISTAND is a pathway to qualify novel drug development tools that fall outside traditional qualification programs. It explicitly welcomes AI-based methods for drug development, including AI algorithms to evaluate patients, develop novel endpoints, or inform clinical study design (fda.gov). In January 2024, FDA accepted the first AI-based project into ISTAND – an AI-driven tool to measure depression and anxiety severity from multiple behavioral and physiological indices (fda.gov).

This tool uses a machine-learning model to derive clinician-reported outcome scores (based on the HAM-D and HAM-A rating scales) and is a hybrid of a digital health technology (DHT) and an AI/ML model, intended to support clinical trials in neuroscience. Acceptance of the Letter of Intent is only the first step toward qualification, not qualification itself, but it marks the first time an AI-based methodology has entered this pathway, illustrating FDA’s de facto sandbox approach of qualifying AI innovations for regulatory use.

Another sandbox-like FDA initiative is PrecisionFDA, a cloud-based collaborative platform established by FDA’s Office of Digital Transformation. PrecisionFDA provides a secure “sandbox” environment for data science and AI model development with over 7,900 users globally (fda.gov). It hosts community challenges (e.g. an AutoML “app-a-thon” in 2024) to explore how AI/ML can improve biomedical data analysis (fda.gov).

PrecisionFDA even supports fine-tuning generative AI models (LLMs) on biomedical datasets in a contained setting. While not specific to clinical trials, this sandbox accelerates regulatory science around AI by allowing innovators to test algorithms on FDA-provided data resources in a risk-controlled environment.

Key Features

The FDA’s ISTAND pilot functions as a limited-entry sandbox: it accepts a few novel proposals each year (2–4 annually during the pilot) to ensure manageability (fda.gov). Submissions are triaged based on public health impact and feasibility. Accepted tools undergo a three-step qualification process (Letter of Intent → Qualification Plan → Full Qualification) similar to traditional drug development tool qualification. The scope of experimentation is broad – ISTAND can cover digital trial endpoints, AI-driven patient selection or monitoring tools, novel trial designs (e.g. decentralized trials), and even nonclinical AI tools.

The duration is not fixed, but each project works through qualification stages with FDA feedback at each step. Eligibility focuses on tools with sound scientific rationale that address an unmet need in drug development and are feasible to implement. Regulatory involvement is high: FDA staff engage closely with sponsors to refine the tool and ensure it meets evidentiary standards for qualification.

In essence, FDA “joins the developer in the sandbox” by providing iterative guidance before the tool is formally used in a trial or regulatory submission. Notably, FDA expects good practices (such as data management controls to mitigate bias) to be in place even during the early, sandbox stage of AI model creation, before formal development begins (thefdalawblog.com).
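The staged ISTAND progression described above (Letter of Intent, then Qualification Plan, then Full Qualification, with FDA feedback at each step) can be sketched as a simple state machine. This is a simplified model under stated assumptions: the terminal `QUALIFIED` state, the hypothetical `advance` helper, and the rule that a sponsor stays at the same stage to revise and resubmit when FDA does not accept a submission are illustrative simplifications, not a description of FDA procedure.

```python
from enum import Enum


class Stage(Enum):
    """Stages of the ISTAND qualification pathway, as summarized in this report."""
    LETTER_OF_INTENT = 1
    QUALIFICATION_PLAN = 2
    FULL_QUALIFICATION = 3
    QUALIFIED = 4  # hypothetical terminal state: tool qualified for its context of use


# Each stage can only lead to the next one; stages cannot be skipped.
NEXT_STAGE = {
    Stage.LETTER_OF_INTENT: Stage.QUALIFICATION_PLAN,
    Stage.QUALIFICATION_PLAN: Stage.FULL_QUALIFICATION,
    Stage.FULL_QUALIFICATION: Stage.QUALIFIED,
}


def advance(current: Stage, fda_accepts: bool) -> Stage:
    """Return the sponsor's stage after FDA reviews the current submission.

    Assumption: if FDA does not accept, the sponsor remains at the same stage
    to revise and resubmit (a simplification of the real process).
    """
    if current is Stage.QUALIFIED:
        raise ValueError("tool is already qualified; no further stages")
    return NEXT_STAGE[current] if fda_accepts else current


if __name__ == "__main__":
    stage = Stage.LETTER_OF_INTENT
    # One round of revision at the plan stage, then acceptance through to the end.
    for accepted in (True, False, True, True):
        stage = advance(stage, accepted)
        print(stage.name)
    # prints QUALIFICATION_PLAN, QUALIFICATION_PLAN, FULL_QUALIFICATION, QUALIFIED
```

Modeling the stages explicitly makes the key property of the pathway visible: a tool reaches the terminal state only after passing through every stage in order, with a review gate at each transition.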

Use Cases and Pilots

The AI-based depression/anxiety severity tool is a concrete example of an AI use case in clinical trials progressing through ISTAND. By accepting it, FDA signaled willingness to consider AI-generated clinical outcome assessments (COAs) as valid evidence. Another ISTAND admission (Sept 2024) was an organ-on-a-chip model for predicting liver toxicity, showing that the sandbox’s range extends from AI algorithms to microphysiological systems.

Beyond ISTAND, FDA has other innovation programs relevant to AI: for instance, the Model-Informed Drug Development (MIDD) Paired Meeting Program, which began as a pilot, covers model-based approaches such as clinical trial simulation and dose selection, areas where AI/ML methods may be applied.

FDA’s Center for Devices and Radiological Health (CDRH) also ran a Software Precertification (Pre-Cert) pilot for Software as a Medical Device, but it targeted devices rather than drug trials. Cross-center efforts are nonetheless converging: in March 2024 FDA published a joint paper, “Artificial Intelligence and Medical Products: How CBER, CDER, CDRH, and OCP are Working Together” (fda.gov), aiming for aligned regulatory approaches to AI across drugs, biologics, and devices. This coordination suggests that sandbox learnings in one area (e.g. devices) may inform drug trial regulation and vice versa.

Stakeholder Engagement

The FDA has actively engaged industry, academia, and the public to shape its AI regulatory approach. In developing its January 2025 draft guidance “Considerations for the Use of Artificial Intelligence to Support Regulatory Decision-Making for Drug and Biological Products,” CDER drew on extensive external input: an expert workshop in Dec 2022, over 800 public comments on a 2023 discussion paper, and a public meeting in Aug 2024 on AI principles.

This inclusive approach indicates that even without a single formal sandbox, FDA’s policy development has been sandbox-like, incorporating real-world stakeholder feedback and case studies into guidance. FDA’s PrecisionFDA challenges are open to global participants (industry, academia, individuals), and winners/participants often publish results, fostering a community of practice (fda.gov).

Additionally, transparency is built into ISTAND: accepted projects are publicly announced (with descriptions of the AI tool and its context of use), and the program follows 21st Century Cures Act transparency provisions (meaning qualified tools and summary reviews are made public).

This open sharing of lessons (e.g. publishing qualification decisions or white papers) engages the wider stakeholder community. Indeed, lessons from sandbox testing can inform broader guidance even before formal approvals (reports.weforum.org).

Outputs and Regulatory Impact

FDA’s sandbox-like initiatives have already begun to yield outputs that shape regulatory policy. The January 2025 draft guidance on AI in drug development is a direct outcome of these efforts, distilling best practices for industry (fda.gov). FDA also released a detailed discussion paper (May 2023, revised Feb 2025) on AI/ML in drug development (fda.gov), which serves as a de facto sandbox report, summarizing challenges and proposals for future regulation. The volume of practical experience is also growing: CDER has seen more than 500 submissions with AI components between 2016 and 2023.

The experience from reviewing these has informed internal governance: CDER established an AI Steering Committee and then an AI Council in 2024 to coordinate AI-related regulatory policy across the drug center (fda.gov).

Moreover, once a tool is fully qualified through ISTAND, any drug sponsor can use it within its qualified context of use without FDA re-reviewing the tool’s suitability, which streamlines innovative trial designs. For example, if the AI-based depression severity tool completes qualification, future clinical trials could incorporate it as an endpoint measure with confidence that FDA accepts its validity for that context of use.

We also see broader strategic impact: FDA’s Commissioner has emphasized using AI to modernize trial design and review processes as part of the agency’s vision. Thus, the FDA’s approach – while not a single sandbox program – has baked AI sandbox principles into its regulatory science framework, influencing guidance, reviewer training, and policy alignment across centers.

Influence on National Strategy

The FDA’s AI initiatives align with the U.S. strategy of maintaining leadership in biomedical innovation. By embracing pilot programs and collaborative sandboxes like PrecisionFDA, FDA provides a pathway for AI innovators to contribute to drug development under regulatory supervision, which in turn helps the agency develop appropriate oversight tools. The United States has thus far avoided a separate AI regulatory regime, relying on FDA’s existing authorities and flexible pilots.

This incremental, science-driven sandbox approach supports the broader national AI strategy by ensuring that AI advances in biomedicine can be integrated into regulatory processes without compromising safety.

The FDA explicitly acknowledges that “AI will undoubtedly play a critical role in the drug development life cycle” and is developing a risk-based framework to both promote innovation and protect patients (fda.gov). The sandbox efforts are directly feeding into this framework, as evidenced by cross-center publications and new draft guidance informed by sandbox learnings.

In summary, FDA’s use of collaborative sandboxes and pilots is shaping national regulatory standards for AI in drug development, balancing agility and rigor, and reinforcing the U.S. position in advancing AI-enabled healthcare.

European Union: EMA (European Medicines Agency)

Sandbox Existence and Status

At the EU level, a formal regulatory sandbox for AI in medicinal product development is in the proposal stage, embedded in the sweeping revision of the EU pharmaceutical legislation (unveiled by the European Commission in April 2023). The proposed Regulation introduces the concept of a “regulatory sandbox” for medicinal products. If adopted, this would create a structured, time-limited framework where novel technologies – including AI-driven drug development approaches or clinical trial methods – can be tested in a real-world environment with regulatory safeguards.

While the sandbox concept is not yet operational (the legislation is still under debate as of 2025), its inclusion signals a major shift in EMA’s approach to enabling breakthrough innovation (like digital trials, AI analytics, etc.) that current rules don’t fully accommodate.

Notably, the sandbox would be available only for products regulated as medicinal products (drugs or biologics), ensuring a pharma focus. The legislative draft explicitly cites “deployment in the context of digitisation” – implying digital health and AI applications in drug development – as targets for sandbox trials.

In anticipation of this future sandbox framework, the EMA and the European medicines regulatory network have been laying groundwork. The EMA’s Network Data Steering Group (NDSG) adopted a Multi-Annual Workplan 2025–2028 that emphasizes AI in four key areas: guidance, tools, collaboration, and importantly “experimentation – ensuring a structured and coordinated approach” (ema.europa.eu). This reflects a sandbox mentality: coordination across EU regulators to experiment with AI in a structured way.

Currently, no dedicated EMA-run sandbox program is active specifically for AI in clinical trials, but mechanisms like EMA’s Innovation Task Force (ITF) and Scientific Advice procedures allow sponsors to discuss innovative AI methodologies case-by-case. Moreover, EMA already has a process to qualify novel methodologies (similar to FDA’s DDT qualification). Through this, EMA achieved a milestone in 2025: its first qualification opinion on an AI-based methodology for drug development.

In March 2025, the CHMP issued a qualification opinion accepting clinical trial evidence generated by an AI tool (the “AIM-NASH” tool for analyzing liver biopsy images in NASH/MASH trials) as scientifically valid. This is effectively sandbox output – the tool was evaluated in a controlled pilot context (including a public consultation on its draft opinion) and then formally recognized for use in trials. Such ad hoc “pilot” evaluations serve as precursors to a formal sandbox: EMA is demonstrating willingness to engage with AI innovations before the new legal sandbox framework even comes into force.

Key Features of Proposed Sandbox

The EU pharmaceutical law proposal (Articles 113–115) outlines how an EMA sandbox would operate. Scope of experimentation: very broad, but with strict entry criteria. A sandbox can be established only if (a) the innovative product or approach cannot be developed under existing regulations (i.e. something about it doesn’t fit current rules), and (b) it likely offers a significant advance in quality, safety, or efficacy or a major advantage in patient access.

This means sandboxes are reserved for truly novel AI-driven products or trial designs that break the regulatory mold and promise substantial benefits. Initiation and duration: EMA would proactively monitor emerging technologies and, when it identifies a candidate, could recommend that the European Commission set up a sandbox.

The sandbox is established by a legally binding Commission decision and is time-limited (with the possibility of extension if needed). Sandbox plan: EMA’s recommendation must include a detailed plan specifying the product(s) involved, the scientific/regulatory justification, which regulatory requirements need to be waived or adjusted, risk mitigation measures, and the sandbox’s duration. In effect, this plan is the governance blueprint – it may, for example, allow a novel AI-based trial endpoint to be used in lieu of a traditional endpoint, with predefined safeguards and data collection requirements.
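The entry test and plan requirements described above have a simple logical shape: criterion (a) must hold together with at least one limb of criterion (b), and the plan must specify each listed element. The Python sketch below encodes that reading purely as an illustration. The class, field, and function names are hypothetical, expressing duration in months is an assumption, and the real assessment under the proposed Articles 113–115 would be a scientific and legal judgment rather than a set of booleans.

```python
from dataclasses import dataclass, field


@dataclass
class SandboxProposal:
    """Hypothetical record of a candidate for the proposed EU regulatory sandbox."""

    # Criterion (a): cannot be developed under the existing rules.
    incompatible_with_existing_rules: bool
    # Criterion (b), first limb: significant advance in quality, safety or efficacy.
    significant_advance: bool
    # Criterion (b), second limb: major advantage in patient access.
    major_access_advantage: bool
    # Plan elements EMA's recommendation must specify (as summarized in this report).
    products: list = field(default_factory=list)
    justification: str = ""
    requirements_to_adjust: list = field(default_factory=list)
    risk_mitigations: list = field(default_factory=list)
    duration_months: int = 0  # assumed unit; the sandbox must be time-limited


def meets_entry_criteria(p: SandboxProposal) -> bool:
    """Criterion (a) AND criterion (b), where either limb of (b) suffices."""
    return p.incompatible_with_existing_rules and (
        p.significant_advance or p.major_access_advantage
    )


def missing_plan_elements(p: SandboxProposal) -> list:
    """List the plan elements that are still empty or invalid, in a fixed order."""
    checks = {
        "products": bool(p.products),
        "justification": bool(p.justification.strip()),
        "requirements_to_adjust": bool(p.requirements_to_adjust),
        "risk_mitigations": bool(p.risk_mitigations),
        "duration_months": p.duration_months > 0,
    }
    return [name for name, ok in checks.items() if not ok]


if __name__ == "__main__":
    proposal = SandboxProposal(
        incompatible_with_existing_rules=True,
        significant_advance=False,
        major_access_advantage=True,
        products=["hypothetical AI-derived endpoint"],
        justification="Endpoint not covered by current requirements.",
        requirements_to_adjust=["endpoint requirement (illustrative)"],
        risk_mitigations=["human expert review of AI outputs"],
        duration_months=24,
    )
    print(meets_entry_criteria(proposal), missing_plan_elements(proposal))  # True []
```

Separating the entry test from the plan-completeness check mirrors the two-step logic in the proposal: first whether a sandbox is justified at all, then whether the recommended plan is specific enough to govern it.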

Crucially, regulatory involvement and safeguards are built in. Even within the sandbox, the product is “contained” – it can be used in trials but cannot be commercially marketed until it obtains full marketing authorization. National regulators (e.g. ethics committees and national competent authorities for clinical trials) must consider the sandbox plan when approving trial applications, ensuring alignment. The Commission’s sandbox decision can also permit temporary derogations from certain EU pharmaceutical requirements for the sandbox product, but only those strictly necessary for the experiment’s goals.

For instance, if an AI-driven dosing algorithm did not fit existing requirements, specific rules might be waived or supplemented under defined conditions. Such concessions would have to be narrow and justified, and they would be reflected as conditions in any eventual marketing authorization.

In terms of eligibility and participants, the sandbox could potentially cover a single product or a category of products. EMA is expected to engage not just the product sponsor but also independent experts, clinicians, and even patients as needed, indicating a broad stakeholder involvement in design and oversight of each sandbox experiment.

Use Cases and Pilots

Since the EMA’s sandbox is pending, concrete “in-sandbox” use cases are limited to pilots under existing frameworks. The AIM-NASH tool is a prime example: it was developed by industry (PathAI) and underwent EMA’s qualification procedure. The tool employs AI to interpret histological images, potentially enabling smaller or shorter trials in NASH by providing more sensitive measures of treatment effect.

After iterative assessment and a draft review open for public comment, EMA’s CHMP formally qualified it – the first time EMA accepted AI-generated trial data as valid (ema.europa.eu). This sets a precedent for future AI tools (e.g. AI for imaging endpoints, digital biomarkers, or patient selection algorithms) to be qualified. Another near-sandbox activity is EMA’s regulatory science research via public-private partnerships.

For instance, the IMI (Innovative Medicines Initiative) has funded projects on AI in drug discovery and trials; while not regulated by EMA, they often involve EMA as an observer, and results (such as methodologies for adaptive trials using AI) feed into EMA discussions.

The EMA’s Innovation Task Force (ITF) has also likely discussed AI-based trial proposals – the ITF is a forum where sponsors of cutting-edge technologies (including AI-based trial designs or AI-derived evidence) get preliminary feedback from EMA. Though confidential, the growing number of AI inquiries at ITF is referenced in EMA’s Reflection Paper on AI (published in late 2024). That reflection paper provides insight into use cases across the medicinal product lifecycle – from drug discovery to clinical trial data analysis – and how EMA views them.

One specific collaborative sandbox-like project is the DARWIN EU initiative, a federated real-world data (RWD) network coordinated by EMA that is not AI-focused per se. If AI tools are used to analyze real-world data for regulatory questions, DARWIN EU could act as a sandbox for validating those algorithms in pharmacovigilance or epidemiology contexts, in line with the idea of a controlled environment for new data approaches.

In summary, until the formal sandbox launches, EMA’s “pilots” consist of qualification opinions (like AIM-NASH) and proactive guidance development for AI. These efforts simulate sandbox outcomes by letting one-off cases blaze a trail that general policy can later follow.

Stakeholder Engagement

The EMA has emphasized transparency and multi-stakeholder input in its AI readiness efforts. The draft EU sandbox framework itself mandates that EMA engage with developers, experts, clinicians, and patients in monitoring emerging innovations. Even now, EMA’s process for qualifying novel methodologies includes public consultation, as seen with the AIM-NASH tool (public comments were solicited in late 2024 on the draft opinion).

EMA’s Reflection Paper on AI in the medicinal product lifecycle (adopted by CHMP in Sept 2024) was developed by the Methodology Working Party with Big Data Steering Group support and incorporated comments, including from industry and academia, received during a public consultation on the 2023 draft.

Additionally, EMA and the Heads of Medicines Agencies (HMA) have jointly run conferences and surveys on “Big Data” and AI since 2019, ensuring broad input. One noteworthy engagement tool is the EMA/HMA Artificial Intelligence Task Force; in parallel, the Big Data Steering Group has recently evolved into the NDSG, which covers AI, data, and related topics.

They published guiding principles for staff use of AI (like large language models) in 2024, which, while internal, were made public – signaling openness about how regulators themselves use AI. This internal upskilling also involved international collaboration: EMA, FDA, and other regulators exchange best practices through forums like ICMRA (International Coalition of Medicines Regulatory Authorities).

Industry and patient groups are also being involved in sandbox discourse. For example, the European Federation of Pharmaceutical Industries and Associations (EFPIA) in 2023 supported the inclusion of sandboxes, seeing them as essential for AI innovation in medicines (efpia.eu). The sandbox concept has been generally welcomed by pharma companies as it promises earlier interaction with regulators on novel tech. In terms of transparency, any sandbox established in the EU will have publicly documented rules (via Commission decision) and outcomes (one can expect summary reports or even publications on lessons learned).

This is intended to allow the “learnings to inform future changes to EU medicines rules”, a sentiment explicitly stated by the Commission. Thus, stakeholder engagement is both upfront (in designing and running the sandbox) and downstream (in diffusing the knowledge gained).

Outputs and Regulatory Impact

Though the sandbox is not yet operational, sandbox-like regulatory outputs are already emerging in the EU. The AI reflection paper (2024) is high-level guidance to medicine developers on using AI throughout R&D. It signals regulators’ expectations (e.g. the need for transparency, data quality, and human oversight in AI) and will likely evolve into more formal guidance once sandbox experience accumulates.

Another output is the CHMP qualification opinion for AIM-NASH (2025) – a concrete regulatory endorsement of an AI tool, which now allows any sponsor to use the AIM-NASH methodology in NASH clinical trials with confidence that EMA accepts it. This is analogous to FDA’s qualification: it effectively standardizes an AI approach for broader use.

The process of developing that opinion also highlighted important considerations (public comments raised issues of algorithm bias and generalizability, which EMA had to address). Those considerations feed into ongoing policy (for instance, they underscore why human pathologist oversight was required – the tool was accepted as AI-assisted, not fully autonomous, aligning with a risk-based stance).

Looking ahead, the greatest regulatory impact of the sandbox will be adaptation of the EU regulatory framework. The Commission envisions that data from sandbox experiments could support real-world evidence in approvals and lead to “adaptive or more flexible approaches to marketing authorization routes”. For example, a successful sandbox trial might enable adaptive licensing (staged approvals) for a drug, or pave the way for guidance on using AI-derived endpoints in pivotal trials.

In fact, the draft law provides that if a product is authorized while still in a sandbox, its initial marketing authorization is time-limited to the sandbox duration, a novel regulatory tool for closely monitoring such products. This shows the sandbox’s influence: it is pushing regulators to rethink traditional authorization validity, labelling (the product information of products developed in a sandbox would state this), and pharmacovigilance oversight within sandboxes.

Influence on National/Regional Strategy

The concept of AI sandboxes is also reflected in Europe’s broader AI policy. The EU’s AI Act (expected to come into force around 2025) will require each Member State to establish at least one AI sandbox, which could include health applications. While the AI Act sandboxes are horizontal (not limited to medicines), this will create an ecosystem of experimentation across Europe.

Some EU countries are already piloting such sandboxes (e.g. Spain and Germany have general AI sandboxes, and France’s health regulator has discussed “living labs” for digital health). The EMA’s sandbox, once live, will complement these by focusing on medicinal AI. It positions the EU to respond to innovation earlier in the product lifecycle rather than reacting when a product is ready for approval.

Strategically, this aligns with the goals of the EU’s Accelerating Clinical Trials (ACT EU) initiative to modernize trials and with the European ambition to be competitive in AI-driven healthcare innovation. By embedding the sandbox in law, the EU shows a commitment to regulatory agility, a necessary counterbalance to the often-criticized slow EU approval processes. If successful, the sandbox could become a model for global regulation, reinforcing the EMA’s influence on international guidelines (ICH etc.) in incorporating AI.

In summary, although Europe’s sandbox is nascent, it is a cornerstone of the EU’s biomedical AI strategy – aiming to marry the EU’s rigorous safety standards with a more innovation-friendly posture by providing controlled real-world testing before full approval.

United Kingdom: MHRA (Medicines and Healthcare products Regulatory Agency)

Sandbox Existence and Focus

The UK’s MHRA launched an AI-focused regulatory sandbox pilot known as the “AI Airlock” in spring 2024 (gov.uk). This is the MHRA’s first sandbox and is explicitly dedicated to AI as a Medical Device (AIaMD) products. While the emphasis is on medical devices (including software), rather than drugs, it directly addresses AI technologies that often intersect with clinical trials and treatments – for example, AI diagnostic tools or AI-driven decision support systems used alongside drug therapies.

The AI Airlock is a pilot program designed to test and improve the regulatory framework for AI-powered medical devices so that they can reach patients safely and efficiently (gov.uk).

By its nature, this sandbox involves evaluating how companies can generate evidence of safety and performance for AI tools that learn and adapt, a challenge highly relevant to AI in clinical settings. Importantly, the UK explicitly labels AI Airlock as a regulatory “sandbox” – a safe space for manufacturers and regulators to experiment with evidentiary approaches under supervision. This pilot, though not about drug products per se, covers AI innovations that could be used in clinical trial contexts (for instance, AI diagnostic algorithms to select patients or AI imaging endpoints).

The UK’s choice of this domain reflects where it sees an urgent need: regulating adaptive AI systems (which can update as they learn) requires new oversight models, and a sandbox allows the MHRA to refine these models in collaboration with developers.

Key Features

The AI Airlock’s scope is currently limited to a small number of AIaMD products across diverse clinical areas, to capture a range of regulatory challenges. In the 2024 pilot cohort, five innovative AI technologies were selected from an open call for applications. These include AI devices for cancer diagnosis, chronic respiratory disease management, and radiology diagnostics, among others.

Each chosen product represents a unique use case (e.g. an AI for reading medical images, an AI for predicting disease exacerbations) and is associated with a specific regulatory question – such as how to validate an AI that continuously learns, or how to assure safety when an AI aids in clinical decisions. The sandbox duration for the pilot phase was roughly one year (it “ran until April 2025”). During this time, manufacturers worked under MHRA oversight, largely in virtual or simulated trial settings (no patients were put at risk) to figure out the best ways to collect evidence for their AI devices’ performance.

The sandbox thus did not grant market approval, but allowed testing methods in conditions mimicking real use.

Eligibility criteria for the AI Airlock were clearly defined: applicants had to show their AI device was novel, had potential significant patient/NHS benefits, and posed a regulatory challenge that was ready to be addressed in the sandbox. The aim was to pick cases that would be most informative for future rulemaking. Regulatory involvement: MHRA staff (and partner bodies) worked side-by-side with the companies. The sandbox is inherently collaborative – involving UK Approved Bodies (which certify devices for UKCA marking), the NHS (the end-user setting), and possibly other regulators like the Information Commissioner’s Office for data aspects.

This multi-organization team helped identify pitfalls and solutions. For example, if an AI’s training data might be unrepresentative, regulators and NHS experts would advise on additional data needed. Regulatory safeguards: All activity is under MHRA supervision, and no sandbox participant is exempt from ensuring patient safety. The Airlock is more about exploring evidence generation than waiving safety requirements. Indeed, MHRA describes it as a “type of study” where evidence collection strategies are explored under oversight. By the end of the pilot, the MHRA expected to compile findings on what worked and what regulations might need changing.
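The three published AI Airlock eligibility criteria (novelty, potential for significant patient or NHS benefit, and a regulatory challenge ready to be addressed) apply jointly: an applicant must satisfy all of them. The Python sketch below illustrates that screening step under stated assumptions. The names are hypothetical, and filling a fixed-size cohort in submission order is an invented tie-breaking rule; MHRA’s actual selection also weighed how informative each case would be for future rulemaking, which this sketch does not model.

```python
from dataclasses import dataclass


@dataclass
class AirlockApplication:
    """Hypothetical record of an AI Airlock application against the three criteria."""
    name: str
    is_novel: bool
    significant_patient_or_nhs_benefit: bool
    regulatory_challenge_ready: bool


def is_eligible(app: AirlockApplication) -> bool:
    """An application is eligible only if all three criteria are met."""
    return (
        app.is_novel
        and app.significant_patient_or_nhs_benefit
        and app.regulatory_challenge_ready
    )


def shortlist(apps: list, capacity: int) -> list:
    """Names of eligible applications, up to `capacity`, in submission order.

    Submission-order selection is an assumption made for illustration only.
    """
    if capacity < 0:
        raise ValueError("capacity must be non-negative")
    return [app.name for app in apps if is_eligible(app)][:capacity]


if __name__ == "__main__":
    applications = [
        AirlockApplication("Device A", True, True, True),
        AirlockApplication("Device B", True, False, True),  # fails the benefit criterion
        AirlockApplication("Device C", True, True, True),
    ]
    # A capacity of 5 mirrors the size of the 2024 pilot cohort described in the report.
    print(shortlist(applications, capacity=5))  # ['Device A', 'Device C']
```

Treating eligibility as a strict conjunction reflects the report’s description that applicants had to show all three properties; ranking among eligible candidates is where real selection judgment would come in.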

Use Cases and Outcomes

The five selected AI technologies illustrate the sandbox’s practical value. Although MHRA hasn’t publicly named all five, they noted inclusion of AI for oncology and respiratory care, and radiology AI. One can infer examples: perhaps an AI that reads CT scans for lung cancer, an AI that analyzes chest sounds for COPD patients, or an algorithm predicting chemotherapy responses. Each of these would face different regulatory questions (diagnostic accuracy validation, reliability across populations, etc.). Through the Airlock, these companies might, for instance, run a simulated clinical trial using retrospective patient data to see if their AI’s performance holds up, or test how often the AI model needs updating before deployment.

A concrete outcome expected in 2025 is a report of findings. MHRA announced that findings from the pilot, due in 2025, will inform future projects and influence AI Medical Device guidance (gov.uk). For example, one anticipated impact is on how MHRA works with Approved Bodies for UKCA marking of AI devices (gov.uk). If the sandbox showed that current standards are too rigid or unclear for AI, MHRA can refine guidance on evidence requirements for AIaMD. It’s also expanding: by March 2025, seeing early positive results, the government committed funding for Phase 2 of AI Airlock in 2025–26.

Phase 2 opened for new applications in mid-2025, aiming to incorporate more products and perhaps tackle additional issues. This indicates the pilot’s success in generating interest and insights (indeed, receiving more applicants than could be admitted).

Stakeholder Engagement

The UK has been very open and collaborative in this sandbox initiative. Industry engagement began with an industry-wide call for applications in Autumn 2024. Clear criteria and timelines were published, and multiple companies applied, evidencing strong interest. The selection was transparent, and the MHRA even held a public webinar (June 19, 2025) to discuss the pilot outcomes and the next phase. This webinar (hosted on MHRA’s YouTube channel) allowed broader stakeholders to hear insights and ask questions, underscoring transparency.

Healthcare system involvement is a standout feature: by partnering with the NHS (the world’s largest integrated healthcare system), the sandbox ensures that practical considerations like workflow integration and clinician acceptance are part of the evaluation.

Senior UK officials have been actively championing the sandbox. The Minister of State for Health highlighted that this project aligns with the UK’s 10 Year Health Plan to digitize the NHS, bringing in promising AI to benefit patients. The Science Minister similarly praised the Airlock as “government working with businesses” to turn innovation into reality, exemplifying high-level political support.

Such endorsements show that the sandbox is integrated into national innovation policy and that the UK is using it as a tool to attract AI developers (creating a pro-innovation regulatory climate).

Internationally, the MHRA’s sandbox has positioned the UK as a leader: a June 2025 press release noted the UK is the first in a new “global network” on the safe use of AI in healthcare. This likely refers to a coalition of regulators sharing sandbox experiences. By spearheading AI Airlock, the MHRA can influence global standards and ensure UK perspectives shape how AI is governed worldwide.

Outputs and Regulatory Impact

Although the pilot has just ended, it has already started influencing UK regulatory strategy. For one, the MHRA’s broader medical device regulations reform (post-Brexit, the UK is updating its device regulations) will incorporate learnings from the AI Airlock. The sandbox is essentially a testbed feeding directly into policymaking. For instance, MHRA indicated that findings will likely guide new UK AI Medical Device guidance – presumably refining requirements for adaptive algorithms, real-world performance monitoring, etc., in upcoming regulatory publications.

The sandbox also informs how MHRA interacts with conformity assessment bodies: MHRA foresees more tailored oversight of Approved Bodies when they assess AI devices, ensuring they use the insights on what evidence truly demonstrates safety for AI.

In short, the Airlock is generating a knowledge base that will shape regulatory guidance, standards, and possibly even legislation (the UK might introduce specific provisions for AIaMD based on this experience).

Another tangible output is increased regulatory capacity. By dealing hands-on with five AI products, MHRA staff and partners have built expertise in AI evaluation. They identified pain points – e.g., how to document algorithm changes or how to validate an AI’s output against clinical outcomes. This expertise is being consolidated in internal MHRA documentation and training, which will benefit future AI product submissions.

Moreover, the sandbox has a signaling effect: it tells AI innovators worldwide that the UK is open to novel health AI and has an enabling regulator. A likely impact is more AI startups choosing the UK for early clinical deployment, knowing there's a supportive sandbox. The £1 million in funding allocated to the program reflects confidence that it will yield economic and health returns.

In summary, while specific “white papers” from the sandbox are not yet out (as of mid-2025), the expected 2025 sandbox pilot report and subsequent Phase 2 will directly inform revised MHRA guidelines (or even statutory requirements) for AI in healthcare. The sandbox’s iterative nature (with more phases planned) means it will continue to be a driving force in UK regulatory innovation.

Influence on National Strategy

The AI Airlock aligns tightly with the UK’s national strategy to be a global leader in life sciences and AI. Post-Brexit, the UK has been keen to demonstrate a nimble regulatory regime that can adapt faster than the EU’s. The sandbox exemplifies this agility. It is part of the government’s vision (as ministers articulated) to transform the NHS with digital technology. By ensuring AI can be validated and adopted safely, the sandbox supports the NHS Long Term Plan’s goals of earlier diagnosis and personalized care.

Economically, it supports the UK's aim to attract AI companies: the sandbox model has been shown to increase investor confidence (UK officials cited fintech sandbox successes where participants saw 40% faster time-to-market), so the Airlock feeds into the UK's innovation economy. Furthermore, the MHRA has historically been influential internationally; with AI Airlock, it has a platform to shape global regulatory principles for AI. Indeed, MHRA is sharing its sandbox experience via international regulatory forums and was noted as the first regulator in a global AI sandbox network.

This boosts the UK’s soft power in setting standards for AI in medicine. In summary, the sandbox is both a tool for domestic regulatory evolution (feeding into guidance and ensuring UK patients benefit from AI sooner) and a pillar of the UK’s international AI strategy, showcasing a balanced approach to innovation and safety that the UK can champion on the world stage.

Switzerland: SwissMedic

Sandbox Existence and Focus: SwissMedic currently does not have a dedicated regulatory sandbox program for AI in drug development or clinical trials. Unlike some of its peers, SwissMedic has not (yet) established an external sandbox inviting companies to test AI innovations under agency oversight. However, Switzerland's approach to AI regulation is evolving in 2025, and there is important context to consider. In February 2025, the Swiss Federal Council announced a national approach to AI regulation that favors integrating AI into sector-specific laws rather than adopting a single AI Act.

This means any framework for AI in healthcare will be woven into existing medicinal product and device regulations. Within this strategy, the idea of innovation-friendly measures is prominent. While not explicitly called “sandboxes,” Switzerland emphasizes non-legally binding measures (like guidelines or industry agreements) and potentially new institutional support to ensure AI can be developed responsibly without stifling innovation.

At SwissMedic itself, the agency pursued an internal innovation sandbox of sorts: the “Swissmedic 4.0” digital initiative (2019–2025). This was essentially an internal innovation lab with a six-person team tasked to explore digital transformation, AI, data science, and agile methods within the agency’s operations (swissmedic.ch). Swissmedic 4.0 functioned as a sandbox for internal processes – questioning everything, trying prototypes, and seeing what might improve regulatory workflows.

For instance, the team developed and implemented a digital risk assessment tool for medical devices (using probabilistic modeling) which enabled faster decision-making by reviewers. They also introduced AI-based tools to alleviate repetitive tasks and held trainings to improve staff data literacy and safe use of AI tools. While this was not a sandbox for external stakeholders, it indicates SwissMedic’s recognition of AI’s importance and its attempt to cultivate expertise in-house.

Innovation Initiatives and Key Features

Because SwissMedic does not run an external sandbox, companies developing AI for drug development in Switzerland have no special regulatory safe harbor beyond the standard pathways. However, there are some Swiss innovation initiatives around AI that indirectly support regulatory goals. For example, the Canton of Zürich launched an “Innovation Sandbox for AI” in 2021, which completed five pilot projects by 2024 (greaterzuricharea.com).

This was a regional program (not specific to medicines) aiming to test AI solutions in areas like healthcare, and it involved developing regulatory expertise and trust through practical experiments (zh.ch). One project reportedly dealt with AI in clinical decision support, collaborating with regulators on privacy compliance. The lessons from such regional sandboxes feed into national discussions and help Swiss regulators understand AI’s challenges.

Another relevant development is the proposal (floated by experts) that Switzerland establish a “Swiss Digital Health and AI Competence Center” with the ability to certify AI medical technologies and offer regulatory sandboxes for life sciences. The argument is that Switzerland, to stay competitive, could create a specialized authority that streamlines approvals for AI in healthcare and even provides sandboxes for real-world testing before full approval.

This has not been implemented, but it reflects serious consideration in Switzerland of the sandbox concept as a tool to attract innovation and ensure safety. If such a sandbox were created, it might allow, for instance, an AI-driven diagnostic or an AI-guided dosing regimen to be tried in a controlled Swiss clinical study with regulatory waivers – giving Swiss patients early access and SwissMedic early insight.

Use Cases and Engagement

In absence of a formal sandbox, SwissMedic’s engagement with AI in drug development has been through case-by-case interactions and international cooperation. SwissMedic participates in the Access Consortium (with Canada, Australia, Singapore, etc.), which has working groups on new technologies – presumably sharing AI-related evaluation approaches (swissmedic.ch). It’s also a member of ICMRA, which in 2022 published a horizon scanning report on AI in medicines regulation.

These forums act as “meta-sandboxes” where SwissMedic can learn from others’ pilots (like FDA’s or MHRA’s) and prepare for similar scenarios. For example, if a Swiss company developed an AI for clinical trial patient stratification, SwissMedic would likely consult these international benchmarks when deciding how to handle it.

Domestically, SwissMedic has shown openness to dialogue. It regularly hosts or attends conferences (the “Regulatory Science Meetings” in Switzerland have discussed AI). The Regulatory & Beyond 2024 report by SwissMedic’s Director mentions exploring how AI can be used in pharma and medtech regulation (swissmedic.ch). This hints that SwissMedic is aware of AI’s potential in trial design, pharmacovigilance, etc., and is reviewing how it might fit within current laws.

Stakeholder Interaction

SwissMedic’s stakeholder engagement on AI has been less public compared to FDA or EMA, largely because no formal consultation on an AI sandbox has occurred yet. However, SwissMedic’s public communications suggest it values stakeholder input for innovation. For instance, during Swissmedic 4.0, they held events and internal seminars featuring “real-life cases” of AI and machine learning, and engaged in dialogue with international organizations (EMA, FDA) to import best practices. This indicates SwissMedic staff were actively learning from external stakeholders, if not directly from industry in a sandbox setting, then from sister agencies and global experts.

Moreover, the Swiss government’s approach to AI includes consultations with industry and academia. The Federal Council’s strategy leans on non-binding measures like self-disclosure agreements or industry solutions – which implies the government might encourage industries (like pharma) to come forward with how they ensure AI is safe/ethical, rather than impose strict new rules initially. This collaborative approach could eventually manifest as a sandbox-like partnership if a particular AI application (say, an AI to analyze clinical trial data across hospitals) needed testing with regulator oversight.

Outputs and Impact

Even without a sandbox, SwissMedic has made progress in modernizing its framework. One measurable outcome of Swissmedic 4.0 is that the agency is now more digitally capable – which ultimately benefits how it will handle AI submissions. The initiative’s results (digital tools integrated, agile methods adopted) were rolled into SwissMedic’s regular operations as of mid-2025. This internal transformation means SwissMedic is arguably more ready to evaluate AI-driven dossiers or to monitor AI in post-market settings.

Regarding formal guidance or policy on AI in drug development, Switzerland often aligns with EU standards to avoid a “Swiss finish” (unnecessary extra requirements). For example, for pharmaceuticals, SwissMedic follows ICH guidelines that implicitly cover computer-assisted methods, and is likely to follow EMA’s lead on any AI-specific guidance. In medical devices, SwissMedic has recognized that legislative changes will be needed to address AI (since Switzerland’s device law mirrors EU MDR but the EU AI Act will not directly apply).

The Federal Council’s sectoral analysis pointed out that SwissMedic’s market surveillance activities for AI devices need strengthening and that device law will require updates for AI. This likely foreshadows Swissmedic issuing guidelines or regulations on continuous learning algorithms or real-world performance data. A sandbox could be one method to develop those guidelines, as suggested by commentators, but until then SwissMedic may rely on requiring case-by-case evidence (for instance, expecting AI device makers to provide data on algorithm retraining effects as part of their approval, even without a sandbox).

Influence on National Strategy

Switzerland's stance is to be pro-innovation while safeguarding trust and fundamental rights. In that light, SwissMedic might not lead with a sandbox, but the concept is on the table as a competitive edge. If Switzerland sees the UK and EU benefiting from sandboxes, it could move to create its own niche sandbox to attract AI-driven trials or digital therapeutics developers. Notably, Switzerland hosts a strong pharma industry and AI research community; a sandbox could strengthen its ecosystem. An April 2025 analysis by the law firm Sidley highlights that Switzerland could differentiate itself by establishing a fast-track AI/digital health authority with sandbox capabilities, to reduce reliance on foreign approvals.

This suggests that providing a sandbox is viewed as a way to keep Switzerland attractive for novel drug development (preventing innovators from bypassing Switzerland in favor of the U.S. or EU).

For now, SwissMedic appears to focus on aligning with global frameworks – meaning if FDA or EMA validates an AI approach, SwissMedic is likely to accept it too, expediting Swiss access to innovation without needing its own sandbox pilot. Additionally, Switzerland’s participation in international harmonization (like IMDRF for devices, ICH for drugs) ensures its policies on AI won’t stray far. In summary, Switzerland’s immediate strategy is cautious integration of AI into existing processes (with internal upskilling via Swissmedic 4.0), but it’s contemplating bolder moves (like sandboxes or a specialized agency) to remain an innovation-friendly jurisdiction.

The coming years (post-2025) will show whether SwissMedic establishes a formal sandbox; until then, its influence comes from contributing to, and learning from, other regulators’ sandbox experiences to update its own regulations pragmatically.

Israel: Israel Ministry of Health (Medicines and Medical Devices Division)

Sandbox Existence and Plans: Israel is in the process of launching a regulatory sandbox for medical AI in healthcare, with particular relevance for clinical trials and drug development contexts. As of mid-2025, the Israeli Ministry of Health (MOH), in partnership with the Israel Innovation Authority, has invited input on pioneering AI projects in healthcare as a prelude to setting up a “regulatory sandbox”.

This indicates that a sandbox is planned but not yet operational. The initiative appears to be a government-backed effort to create a controlled environment where innovative AI solutions can be tested in the healthcare system with regulatory guidance. In Israel’s highly tech-driven environment (often dubbed the “Startup Nation”), this sandbox is seen as a way to balance rapid progress in AI with patient safety and ethics.

While the sandbox’s exact governance structure is still being defined (the MOH issued a Call for Information to stakeholders on how best to implement it), its conceptual focus includes AI in clinical settings beyond decision support – potentially AI that automates or significantly changes medical tasks. This could cover, for instance, AI-designed treatment protocols, AI-based patient monitoring during trials, or AI analysis of trial data to identify endpoints. Israel explicitly drew inspiration from fintech sandboxes, which have been successful in fostering innovation under regulator oversight.

The MOH envisions a sandbox enabling “advanced AI solutions that may fully automate certain medical tasks or significantly change healthcare workflows” to be trialed, while addressing current regulatory limitations.

Notably, Israel’s sandbox discussion (innovationisrael.org.il) is occurring alongside the development of a National AI Strategy (the government has a national AI program aiming to maintain Israel’s leadership in AI). In healthcare, the sandbox is a flagship component of that strategy, acknowledging both the opportunities and sensitivities of AI in medicine.

Key Features (Proposed)

The Israeli sandbox is described as a controlled setting with minimized risks and support to develop appropriate regulations. We can infer some features from the call for information and related commentary:

Scope of experimentation

The scope is likely broad within healthcare – from AI in diagnostics and clinical decision support to AI assisting in clinical trials (e.g. patient recruitment algorithms or AI for analyzing trial results). Projects must address privacy of patient data, safety and efficacy of treatments, and strict compliance with regulations. This implies the sandbox might include projects like using AI on large patient datasets (with privacy safeguards) or testing AI-driven treatment recommendations within a hospital in parallel to standard care (ensuring no harm).

If a pharma company wanted to test an AI that predicts which trial participants are responding to a drug, the sandbox could allow that in a study with MOH oversight before such AI is formally approved.

Duration and structure

Not defined yet, but likely time-bound pilots for each project. Israel might run it as a rolling program where cohorts of AI projects enter for a fixed period (say 6-12 months) to demonstrate proof-of-concept under supervision.

Eligibility and selection

To be determined, but given Israel’s focus, they may prioritize projects with high impact on healthcare quality or efficiency, and which are at a stage to benefit from real-world testing but need regulatory clarity. The Innovation Authority’s involvement suggests they will integrate this with their support for startups – potentially funneling promising health-AI startups into the sandbox program.

Regulatory involvement

High. The sandbox will be led by the MOH (which regulates drugs, devices, and healthcare services in Israel) with input from domain experts. Israel plans to involve experts in clinical practice, technology, and ethics to support the sandbox (gov.il). So a given AI pilot might have a steering committee including MOH regulators, hospital clinicians, AI scientists, and ethicists to monitor progress and advise on needed safeguards. This multidisciplinary oversight is crucial, especially because Israel is very sensitive about data ethics (given its strong data protection regime and the presence of diverse communities).

Risk management

The sandbox explicitly aims to "manage risks and refine regulations" (gov.il). We can expect strict protocols such as informed consent if patients are involved, continuous monitoring, and perhaps the ability to halt a project if any safety concern arises (a sandbox is not a no-rules zone but a modified-rules environment). The MOH will likely set conditions on each experiment; for example, an AI might be tested on retrospective data or in a limited clinical pilot with extra checks.

Use Cases and Potential Pilots

Although the sandbox hasn’t started, we can speculate on the kind of AI projects Israel might include, especially given its robust digital health sector:

Clinical Trial Optimization

Israel might sandbox AI for clinical trial design – for example, an AI system that proposes adaptive trial protocols or identifies optimal patient stratification using historical data. A sandbox pilot could simulate a trial design process where the AI’s suggestions are evaluated against traditional designs, under MOH guidance, to see if regulations on protocol approvals might be adjusted to allow AI-generated designs.

AI in Diagnostics/Treatment to support drug use

Israel has companies working on AI for medical imaging, pathology, etc. If, say, an AI diagnostic is intended to identify which patients should get a certain therapy (thus directly affecting drug use in trials or practice), the sandbox could test this in a controlled manner at a hospital. This intersects with drug development: such a tool could be used in trials to select patients more likely to respond, so an AI companion diagnostic for a drug could itself be sandboxed.

Digital therapeutics or AI-driven interventions

Possibly AI systems that provide therapeutic recommendations (like dosage adjustments) could be tried with clinicians in the loop to measure outcomes. If a company has an AI that adjusts cancer drug dosing in real time based on patient data, a sandbox might allow a small-scale study of that AI's recommendations vs. doctors' standard dosing, with oversight.

No specific pilot has been publicly named yet. However, the initiative’s framing suggests they are open to a wide range of projects as long as they push boundaries (the mention of “fully automate tasks” hints they are looking at cutting-edge AI, not just decision support). This could even include AI in manufacturing or supply chain (less likely in initial phase, but if someone proposes an AI to control a drug manufacturing process, that’s another regulatory area to test).

Stakeholder Engagement

Israel’s MOH has started engaging stakeholders by issuing a Call for Information (essentially a public consultation). This request asks academia, industry, healthcare providers, and others to share their ideas and concerns regarding a medical AI sandbox. Such early engagement ensures the sandbox design will be informed by those who will use it. The involvement of the Israel Innovation Authority is also key – this agency brings in startups, tech companies, and investors. They likely will sponsor workshops or roundtables between regulators and innovators to hash out sandbox procedures.

Israel has a culture of strong academia-industry-government collaboration in tech, often via national programs. For example, the Israel Digital Health Initiative (a few years back) connected hospitals with startups to pilot technologies. The sandbox will build on that by adding the regulator into the mix from the start. We can expect that leading medical centers (like Sheba Medical Center, which has an innovation hub) will be involved, offering sites for sandbox trials. Also, Israel’s ethical and legal experts (some of whom have been vocal in local media about AI in medicine) will be part of the conversation, aligning with the noted inclusion of ethics experts (gov.il).

Transparency should be high: Israeli regulators will likely publish guidelines and results from sandbox projects. In fintech, the Israeli Securities Authority had a fintech sandbox that publicly reported outcomes. For the health AI sandbox, given public interest in health, the MOH will presumably share summaries of what was learned and any regulatory changes considered.

Outputs and Expected Impact

Since the sandbox is at inception, no outputs (like guidelines or approvals) have yet come out of it. However, the explicit goal is to develop appropriate regulations based on sandbox findings. This could result in Israel issuing, for example, new guidance on approval of AI-based medical devices or updating Good Clinical Practice (GCP) guidelines to accommodate AI interventions in trials. In other words, Israel is aiming for "regulation by learning" – using sandbox evidence to shape the rules.

A likely early output might be a white paper or report summarizing the first cohort’s activities and recommending specific regulatory adjustments (e.g., clarifying how to validate an adaptive AI algorithm, or how to certify AI software as a combination product with a drug).

Another impact is on policy: The sandbox is part of a wider push for Israel to remain a global health tech leader. The government’s National Program for AI mentions creating tools and environments to facilitate AI development (innovationisrael.org.il). If the sandbox is successful, it could lead to establishing permanent infrastructure – perhaps even a dedicated Medical AI Unit in the MOH that continuously works with industry on emerging tech (akin to an “AI center of excellence” within the regulator). Also, sandbox learnings might influence Israel’s stance in international standards.

Israel often adopts FDA/EU standards but also contributes to bodies like IMDRF. If the sandbox uncovers, say, a novel metric for AI performance, Israel could propose that in global discussions.

Influence on National Strategy

Israel’s interest in a regulatory sandbox is driven by the need to maintain its innovative edge and ensure public trust in AI in health. The country is known for rapid digital health innovation (e.g., AI startups like Zebra Medical, Aidoc) and a population open to high-tech solutions. However, regulators must ensure these solutions are safe. A sandbox addresses this by allowing innovation under oversight, thereby aligning with Israel’s dual goals of innovation and patient safety. It’s explicitly seen as a positive step for healthcare innovation.

By learning from fintech, Israel acknowledges that supportive regulation can boost its tech sector (startups that went through sandboxes often gained easier access to funding and markets). Similarly, a health AI sandbox will likely make investors and hospitals more confident in trying new AI tools, knowing regulators are on board. This supports Israel’s economy and global status in AI.

Additionally, the sandbox fits into Israel’s approach of being a living lab for digital health. Israel’s health system (with large HMOs and digitized records) is a treasure trove for developing AI solutions. The sandbox will enable leveraging that in a responsible manner, which is a pillar of the national AI strategy: using Israel’s strengths (data and talent) while setting an example in governance. Indeed, an opinion piece in The Jerusalem Post stressed that any healthcare AI sandbox must rigorously address privacy, safety, and compliance – showing that thought leaders are pushing for a sandbox that enhances trust.

We can expect Israel to publicize the sandbox internationally as a model, potentially collaborating with other countries (maybe the UK’s MHRA or Singapore’s HSA) for best practices. In summary, Israel’s forthcoming sandbox is poised to be a key instrument in its national AI and digital health strategy, promoting responsible AI innovation and keeping Israel at the forefront of medical AI development.

Norway: Norwegian Medicines Agency (Statens Legemiddelverk)

Sandbox Existence and Status: Norway’s medicines regulator does not yet have a formal AI sandbox specifically for drug development or clinical trials. However, Norway is laying the groundwork for such innovative regulatory approaches through a cross-agency effort. In the Norwegian health sector, there is an ongoing “cross-agency regulatory guidance service” for AI that could evolve into a sandbox. Launched as part of a Joint AI plan for safe and effective use of AI in health services (2024–2025), this service provides coordinated guidance from multiple regulators to AI projects that face regulatory uncertainties (helsedirektoratet.no).

The Norwegian Directorate of Health leads it, with contributions from the Norwegian Medicines Agency (NoMA), the Norwegian Board of Health Supervision, and others. Essentially, if a hospital or company in Norway has an AI idea (say, using AI to detect adverse drug reactions from EHR data) and is unsure about legal aspects, they can apply to this guidance service. The regulators then jointly advise on how existing regulations apply and where there’s flexibility or need for change.

In 2024, this guidance service handled multiple queries, indicating growing demand. Importantly, Norway has signaled that if the need increases, it will consider expanding this into a true "regulatory sandbox." The plan explicitly states that the authorities will weigh turning the guidance service into a sandbox and possibly involve additional agencies as needed. Thus, Norway's trajectory is incremental: start with guidance (to clarify current rules for AI) and move toward a sandbox (to pilot changes to rules) when warranted.

Meanwhile, Norway has another relevant sandbox: the Norwegian Data Protection Authority (Datatilsynet) AI sandbox, established in 2020. While focused on privacy law, it has run projects in healthcare (e.g., at Oslo University Hospital and Helse Bergen health system) to explore responsible use of AI with patient data (datatilsynet.no).

In those projects, multidisciplinary teams examined issues like bias in an AI analyzing EKGs and how to legally share data for AI development. The outcomes were published as “exit reports” with recommendations. Though this sandbox is under the privacy regulator, the Norwegian Medicines Agency likely follows these findings closely, as they touch on data and algorithmic fairness in clinical contexts – things crucial for approving AI-related trial methodologies. In sum, Norway doesn’t have a sandbox solely under NoMA, but the ecosystem of regulatory sandboxes (data protection, and potential health sandbox) is developing.

Key Features of Current Approach

The active cross-agency guidance service has some sandbox-like characteristics.

Scope

It covers any AI in health that spans multiple regulatory domains. For example, an AI clinical decision support tool might need to satisfy medical device rules, healthcare provider regulations, and data protection – the guidance team brings all these authorities together to advise the project. This breadth means it can address AI in clinical trials if issues cross domains (e.g., using health registries in an AI-driven trial recruitment tool would raise both data protection and trial regulation questions).

Operation

Stakeholders (companies, hospitals) submit an application for guidance. The service then provides targeted, case-specific advice. It’s a flexible, on-demand model rather than a fixed cohort program. The goal is to clarify existing regulations, propose necessary regulatory changes, and help introduce new EU regulations in the context of AI. This explicit mandate to propose regulatory changes is important – it shows Norway uses this as a learning mechanism to feed into policy updates.

Participants

The Norwegian Medicines Agency (Legemiddelverket) contributes "upon request" when cases involve its remit (medicines or medical devices). The Directorate of Health (which oversees health services and national e-health) is the coordinator, ensuring the guidance is holistic. The scheme is also adding the Radiation Safety Authority to cover AI in radiology and related areas.

Expansion to Sandbox

If demand grows, the plan states that the authorities will consider turning it into a sandbox and possibly bring in more agencies. A full sandbox would likely involve allowing some pilot projects to operate with regulatory waivers. For instance, if an AI for clinical decision support doesn't neatly fit current rules, a sandbox could allow it to be tested in a limited way in a Norwegian hospital network, while regulators observe outcomes and adjust rules accordingly.

Use Cases and Engagement

Through the guidance service, Norway has already engaged with several AI projects (though specifics aren’t published, we know “a number of applications” were received in 2024). Some might involve AI in drug development. Norway has active research in AI for personalized medicine (e.g., AI to predict treatment responses from health registry data). It’s plausible that such projects sought guidance on how to use patient data or how to validate AI as a medical device. The guidance service also maintains a cross-agency information page on AI to share Q&As and clarifications gleaned from these consultations, thereby educating the wider sector. This transparency helps all stakeholders understand how regulators interpret current laws for AI.

Additionally, Norway’s participation in European networks means it may be indirectly involved in sandbox initiatives. As an EEA member, Norway is aligned with EMA efforts and will likely implement the EU AI Act’s sandbox requirement. The Norwegian Medicines Agency is therefore likely preparing to engage in an EU sandbox once the pharmaceutical legislation passes (although Norway is not an EU member, it generally adopts EU medicines legislation through the EEA Agreement).

Stakeholder Engagement

Norway’s approach is collaborative and service-oriented. By setting up a multi-agency guidance service, it lowered barriers for stakeholders to approach regulators with novel ideas. This is a form of engagement that builds trust – innovators can discuss plans without immediate fear of regulatory rejection. The Norwegian authorities have been proactive in marketing the guidance service to ensure those who need it know it’s available. They’ve also considered setting up a network for lawyers working with AI to share knowledge, which suggests an open dialogue with the legal community on regulatory interpretation.

Public transparency is also valued: the plan is to update the cross-agency AI info page with clarifications gleaned, meaning the outcomes of guidance (anonymized lessons) are shared publicly. This is akin to publishing sandbox findings or FAQs.

In terms of broad strategy, the Norwegian government’s National AI Strategy (2020) explicitly supported creating regulatory sandboxes for AI, starting with the data protection one (regjeringen.no). So there is political support for sandboxing as a concept. The health authorities are methodically moving in that direction, ensuring they have clarity on current rules first (so they know exactly what needs changing in a sandbox scenario).

Outputs and Impact

One output of the guidance service itself is improved clarity of regulations. When a project comes in, the regulators often realize where rules are ambiguous or outdated, and they can then initiate work to fix them. The AI joint plan notes that the guidance has already been “useful specialist expertise which can help…clarify existing regulations, propose regulatory changes” (helsedirektoratet.no). One impact, then, is that Norwegian regulators are identifying needed updates. For example, if multiple guidance queries revolve around using AI to analyze health registry data for research, the regulators might create new guidelines on secondary use of health data for AI, or feed into law revisions on health registries.

NoMA itself is gaining experience through its involvement, so when an AI-related drug trial application arrives, it will be better equipped to handle it. Also, Norway’s involvement in European projects (such as the EU’s TEF Health AI testing facility, if it participates) means that any guidelines or standards emerging Europe-wide will be quickly absorbed into Norwegian practice.

If and when Norway upgrades to a full sandbox, expect outputs such as sandbox pilot reports and policy changes. For example, Norway could pilot allowing an AI to support treatment decisions in one hospital (with patient consent) in a way that current guidelines would not normally allow. If that goes well, it could incorporate the approach into standard-of-care or device guidelines. A concrete future scenario: the Norwegian Medicines Agency might allow a small biotech to use an AI model to assist in dose selection during a Phase I trial under a sandbox agreement. The outcome might be a national guideline on using AI in early-phase trials.

Influence on National Strategy

Norway is known for a cautious, ethics-focused approach to technology (with strong social trust in institutions). The sandbox/guidance approach reflects this ethos: moving carefully, ensuring all voices (health, tech, ethics, legal) are coordinated, and scaling up only when ready. This collaborative model is part of Norway’s strategy to implement AI safely in public services like healthcare. It’s no surprise that Norway’s plan is named “safe and effective use of AI” – safety is paramount, but they also recognize the potential effectiveness gains. The sandbox concept (even if not yet realized) is baked into that plan as a way to foster innovation without compromising values.

Internationally, Norway can leverage its sandbox and guidance experience to contribute to Nordic and European regulatory innovation. There is Nordic cooperation in health tech, so Norway’s insights from its guidance service might be shared with Sweden, Denmark and others. Norway could also pilot-test EU initiatives (for instance, it might volunteer to host an EU AI Act sandbox focused on health, given its head start in cross-agency work).

In summary, Norway’s medicines regulator is on a pathway towards a sandbox, currently emphasizing guided navigation of rules for AI developers. This measured approach is strengthening Norway’s capacity to regulate AI in drug development and will likely evolve into a more experimental sandbox when needed. It aligns with Norway’s broader AI strategy of enabling innovation in critical sectors like health while maintaining public trust and aligning with European frameworks.

Comparative Analysis of AI Sandboxes Across Regulators

The following table summarizes the six regulators’ sandboxes (or analogous initiatives) across five key dimensions, with a 1–5 scoring where 5 = highest/best and 1 = lowest/none in that category:

Depth/Specificity

The MHRA’s AI Airlock and the FDA’s ISTAND/PrecisionFDA initiatives score highest in terms of specifically targeting AI challenges in the medical domain. The MHRA explicitly branded its pilot as a regulatory sandbox for AI in healthcare devices, demonstrating a clear mandate to grapple with AI’s regulatory issues. FDA’s efforts, while not called a “sandbox,” have dedicated significant attention to AI in drug development (e.g. workshops, the AI-centric ISTAND admissions). The EMA has signaled commitment through legislation, but because the sandbox is not yet implemented, its depth exists only on paper for now.

Israel is on the cusp, planning a sandbox aimed at medical AI – its specificity is fairly high as it zeroes in on healthcare applications. Norway’s approach, being more general AI guidance in health, is somewhat less specifically about drug development sandboxes and more about overall AI regulatory coherence, giving it a middling score. SwissMedic currently lacks any specific sandbox or pilot focusing on AI in medicinal product development, hence the low score.

Scope of Experimentation

EMA’s proposed sandbox and Israel’s planned sandbox both envision a wide scope. EMA’s could cover any innovation that doesn’t fit existing rules, from AI in clinical trials to novel manufacturing, which makes it very expansive in theory. Israel’s intent to accommodate fully autonomous AI in healthcare suggests a broad array of possible experiments (ranging across diagnostics, treatment planning, etc.).

The FDA’s programs allow many use cases (AI for trials, real-world evidence, nonclinical modeling), though in practice they integrate AI within existing submission frameworks rather than waiving rules entirely (fda.gov).

The MHRA’s Airlock is narrower because it focuses on medical devices. This covers diagnostic and monitoring AI, but not, say, AI for drug molecule discovery or AI-driven manufacturing. Still, within the clinical context, it spans multiple specialties (oncology, radiology, etc.). Norway’s guidance can adapt to many scenarios but is currently limited to advice rather than live experimentation (helsedirektoratet.no).

Switzerland presently has no platform for experimental deviation from norms; any AI use must conform to existing regulations on drugs or devices.

Maturity

The regulators fall on a spectrum from operational to conceptual. The FDA has actively run AI-inclusive pilots for years (hence high maturity) – for example, ISTAND has accepted AI tools and is functioning in practice (fda.gov). MHRA’s sandbox just completed a pilot phase and is moving to a second, so it’s in between pilot and fully established – a solid mid-to-high maturity.

Norway’s initiative is operational as a guidance service but not a true sandbox yet, so its maturity is low-to-middling. Israel and EMA are forward-looking but not yet executing pilots (planned stage only, hence low maturity until they launch). SwissMedic has none in place, effectively no maturity in this regard.

Stakeholder Engagement

All regulators recognize the value of engaging stakeholders, but the UK and US stand out for their breadth and transparency. The UK involved industry from the outset (open calls, public comms) and integrated healthcare system players into the sandbox. The US FDA has been very open via public meetings and democratized platforms like PrecisionFDA, plus multi-stakeholder drafting of guidances.

EMA has good engagement in developing policies (consultations on reflection paper, etc.), but since its sandbox isn’t active, real-time engagement in sandbox experiments hasn’t happened yet – still, its planning documents envision multi-actor input.

Israel is proactively seeking input (a good sign) and will likely partner with its tech sector closely. Norway’s approach is collaborative among agencies and receptive to those seeking guidance, but it’s somewhat reactive (waiting for applications) and not widely advertised beyond the health sector community.

SwissMedic has engaged globally and internally on AI, but hasn’t directly involved industry or the public in a sandbox context, which limits its score.

Regulatory Impact

FDA and MHRA already show concrete regulatory impacts from their sandbox approaches. FDA’s pilot learnings have directly informed new draft guidance and aligned cross-center policies (fda.gov). The acceptance of AI tools (like the depression severity AI) into ISTAND sets precedents for industry and could expedite future qualifications. The MHRA is clearly using sandbox results to shape its forthcoming medical device AI regulations (gov.uk), and the government’s funding of phase 2 indicates belief in its policy value.

EMA’s impact so far is modest but notable – e.g., the qualification of AIM-NASH is a regulatory decision showing EMA’s acceptance of AI-derived evidence (ema.europa.eu), and the reflection paper guides industry broadly.

Once the EMA sandbox is in play, its impact could become very high (if it leads to changes in trial authorization practices EU-wide, for instance).

Norway’s guidance service (helsedirektoratet.no) is gradually influencing policy thinking (the plan states it will help clarify regulations and support implementation of new EU regulations), but it hasn’t yet led to published regulatory changes. Israel’s sandbox hasn’t had an impact yet. The expectation is that it will generate new guidelines or even legislative adjustments for medical AI, but until the sandbox runs, this remains prospective.

Switzerland’s regulatory stance on AI hasn’t significantly changed yet; most movement has been internal or aligning with external standards, so direct sandbox-driven reform hasn’t occurred.

In qualitative terms, three broad patterns emerge:

Proactive Sandbox Leaders (UK, USA)

These regulators took early initiative to create environments for AI experimentation (even if under different names in FDA’s case) and are leveraging them to update regulatory paradigms. Their sandboxes have become part of their identity as innovation-friendly regulators, and they are influencing others (the UK in particular framing itself as a global pioneer).

Adaptive Planners (EU, Israel, Norway)

These regulators are setting up or planning sandboxes because they recognize that future regulation must accommodate AI. They are somewhat behind the leaders in implementation, but they may leapfrog once their frameworks take effect (especially EMA, with a legal mandate across 30 countries, and Israel, with its agile support for its startup ecosystem). Norway is somewhat distinctive, being very measured and ensuring multi-agency alignment first.

Conservative/Indirect Approach (Switzerland)

Here, innovation is managed without a formal sandbox; SwissMedic relies on established pathways and international harmonization. Switzerland might be waiting to see outcomes from others before committing to its own sandbox. The risk is falling behind in attracting cutting-edge trials, but the benefit is avoiding premature regulatory experiments.

Influence on National AI Strategies in Biomedicine

Each sandbox (or lack thereof) feeds into its country’s broader strategy for AI in healthcare:

United States (FDA)

The FDA’s sandbox-like pilots reinforce the U.S. strategy of integrating AI under existing health product frameworks. Rather than separate AI laws, the U.S. uses FDA’s expertise to shape AI norms. This ensures that as AI becomes more prevalent in drug development (e.g. AI-designed drugs or AI-run trials), the regulatory science to evaluate them is already in development (fda.gov). It also signals to the biopharma industry that FDA is not a roadblock but a partner in innovation, aligning with the national aim to be a leader in AI-driven medicine.

European Union (EMA)

The move to include a sandbox in pharmaceutical legislation is a strategic shift for the EU, which historically had very prescriptive rules. It indicates a recognition that too-rigid regulations could stifle AI innovations in drug development. By allowing controlled exceptions, the EU strategy is to learn and adapt – a more dynamic regulatory stance than before. This is complementary to the EU AI Act which places heavy obligations on high-risk AI; the sandboxes provide a counterbalance to enable innovation within that strict regime.

In essence, the EMA sandbox will be a tool to future-proof EU pharmaceutical regulation against fast-moving AI technology cycles.

United Kingdom (MHRA)

The sandbox is central to the UK’s vision of a post-Brexit “innovation nation” in life sciences. It directly supports the government’s Life Sciences Strategy and the NHS digital transformation goals. The fact that ministers are championing it shows it’s more than a regulatory experiment; it’s an industrial strategy pillar. If the AI Airlock yields faster routes to market for safe AI tech, it strengthens the UK’s case that its regulatory environment is world-class and more nimble than the EU’s. This competitive positioning is important in attracting investment and trials to the UK.

Additionally, by involving the NHS, it ensures alignment with national health priorities (like reducing diagnostic backlogs via AI) – so the sandbox influences not just regulation but health policy implementation.

Switzerland (SwissMedic)